What is SageMaker Autopilot?

SageMaker Autopilot is a service provided by Amazon that allows you to automatically build and train machine learning models on your data, with relatively little data science knowledge.

It abstracts away the heavy lifting of building machine learning models. Given a labeled tabular dataset and a target column to predict, Autopilot generates multiple candidate models that you can deploy to enhance your platform.

Additionally, it gives you great visibility into how it generates candidate models and allows you to tweak, modify and train your own variants.

What problems can you use it for?

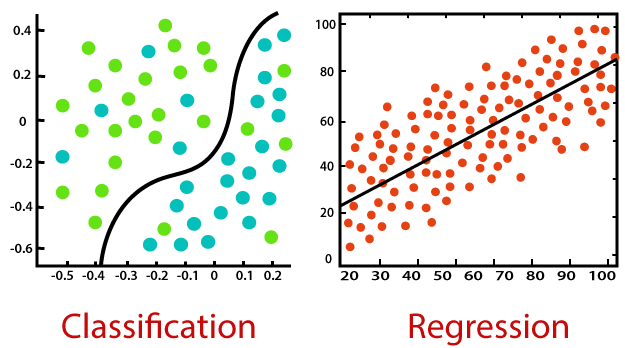

SageMaker Autopilot generates models that either predict values (regression), or predict categories (classification).

Regression algorithms predict continuous values such as price, salary or age. Classification algorithms predict discrete categories such as Cat or Dog, or Spam or Not Spam. Autopilot supports both binary and multi-class classification.

Source: https://www.javatpoint.com/regression-vs-classification-in-machine-learning
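To make the regression versus classification split concrete, here is a toy heuristic that guesses a problem type from the values in a target column. This is only an illustration and is not how Autopilot decides; the function name and the `max_classes` threshold are made up for this sketch. The returned strings mirror the problem type names used by the SageMaker API.

```python
def guess_problem_type(target_values, max_classes=20):
    """Toy heuristic (not Autopilot's logic): guess a problem type from target values."""
    distinct = set(target_values)
    if len(distinct) < 2:
        raise ValueError("target column needs at least two distinct values")

    def is_number(value):
        try:
            float(value)
            return True
        except (TypeError, ValueError):
            return False

    numeric = all(is_number(value) for value in distinct)
    # Many distinct numeric values suggest a continuous target.
    if numeric and len(distinct) > max_classes:
        return "Regression"
    if len(distinct) == 2:
        return "BinaryClassification"
    return "MulticlassClassification"


print(guess_problem_type(["Spam", "Not Spam", "Spam"]))
print(guess_problem_type(["Cat", "Dog", "Neither"]))
print(guess_problem_type([1800 + 7.5 * i for i in range(100)]))
```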

How does it work?

Autopilot runs through three stages:

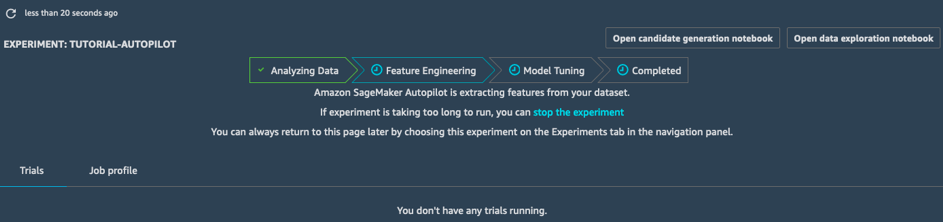

Analyzes Data

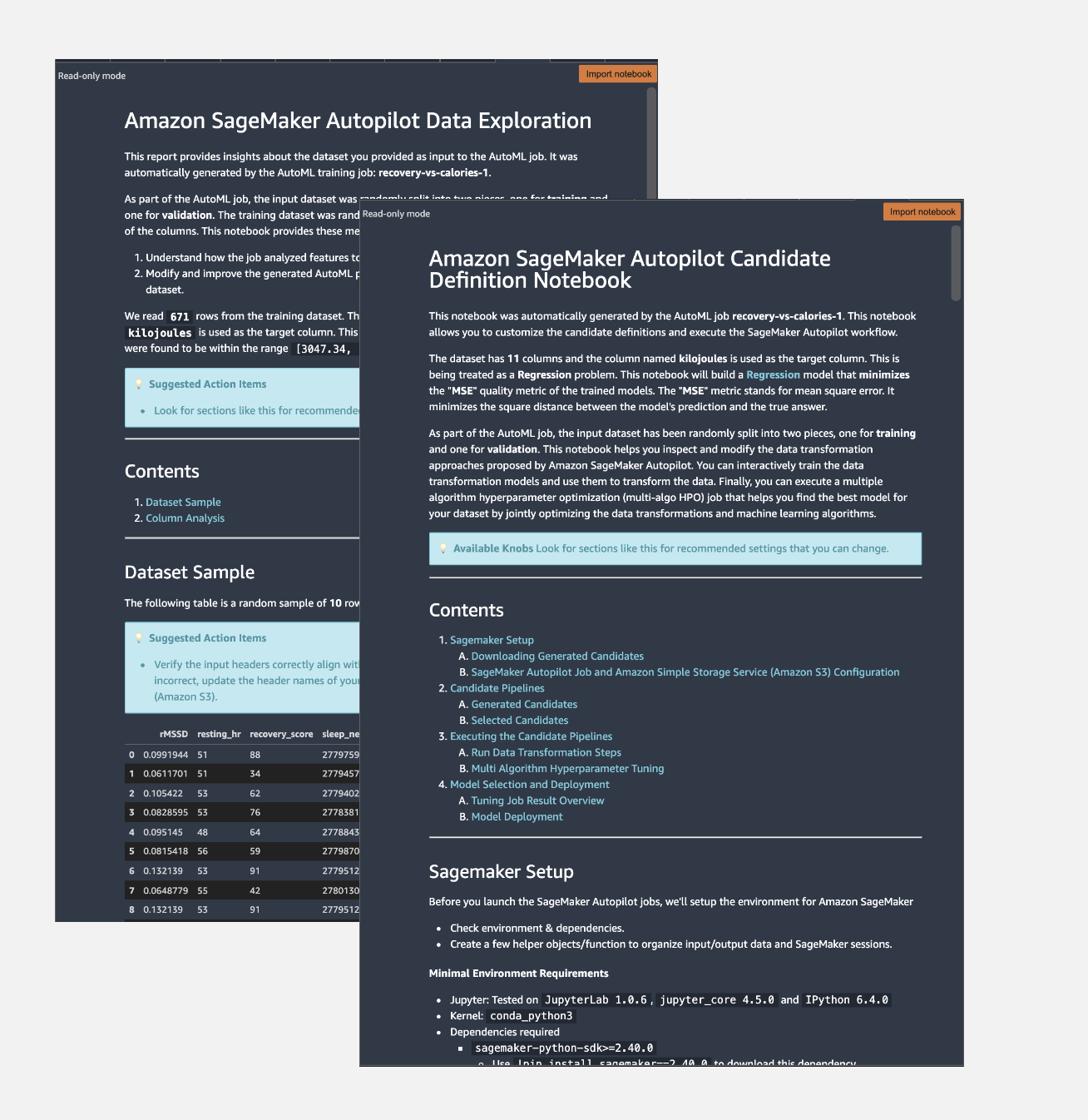

- Produces two notebooks:

  - Data Exploration

  - Candidate Definition

- Identifies problem type to be solved

- Data pre-processing

- Defines candidate pipelines, each using an ML model suited to the problem type supplied or identified

Feature engineering

- Creates training and validation datasets for each pipeline

- Engineers new features automatically, for example by extracting date and time information from timestamps in non-numeric columns
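As a rough idea of what extracting date and time information from a timestamp can look like, here is a minimal sketch using only the Python standard library. The feature names are my own choice, and Autopilot's actual transformations may well differ.

```python
from datetime import datetime


def timestamp_features(timestamp):
    """Expand an ISO 8601 timestamp string into simple numeric features."""
    moment = datetime.fromisoformat(timestamp)
    return {
        "year": moment.year,
        "month": moment.month,
        "day_of_week": moment.weekday(),  # Monday is 0, Sunday is 6
        "hour": moment.hour,
        "is_weekend": int(moment.weekday() >= 5),
    }


features = timestamp_features("2021-03-14T07:30:00")
print(features)
```

A single text column becomes several numeric columns that a model can learn from directly.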

Model tuning

- Launches a hyperparameter optimization job for each candidate pipeline

- Runs many training jobs while iterating on combinations of hyperparameters

- Assesses the results and selects the highest-performing model
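The tuning loop above can be pictured with a small random search. This is a sketch under heavy assumptions: `validation_error` is a hypothetical stand-in for training a model and scoring it on validation data, the two hyperparameters are just examples, and random search is used here for simplicity rather than because it is the strategy Autopilot uses.

```python
import random


def validation_error(learning_rate, max_depth):
    """Hypothetical stand-in for one training job; lower is better."""
    return (learning_rate - 0.1) ** 2 + 0.01 * (max_depth - 6) ** 2


def random_search(trials, seed=0):
    """Try random hyperparameter combinations and keep the best one."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        params = {
            "learning_rate": rng.uniform(0.01, 0.3),
            "max_depth": rng.randint(2, 10),
        }
        score = validation_error(**params)
        if best is None or score < best["score"]:
            best = {"params": params, "score": score}
    return best


best = random_search(trials=50)
print(best["params"], best["score"])
```

Each loop iteration plays the role of one training job, and the final `best` corresponds to the highest-performing model for that pipeline.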

Getting Started

This tutorial assumes basic familiarity with AWS services such as IAM, S3 and EC2.

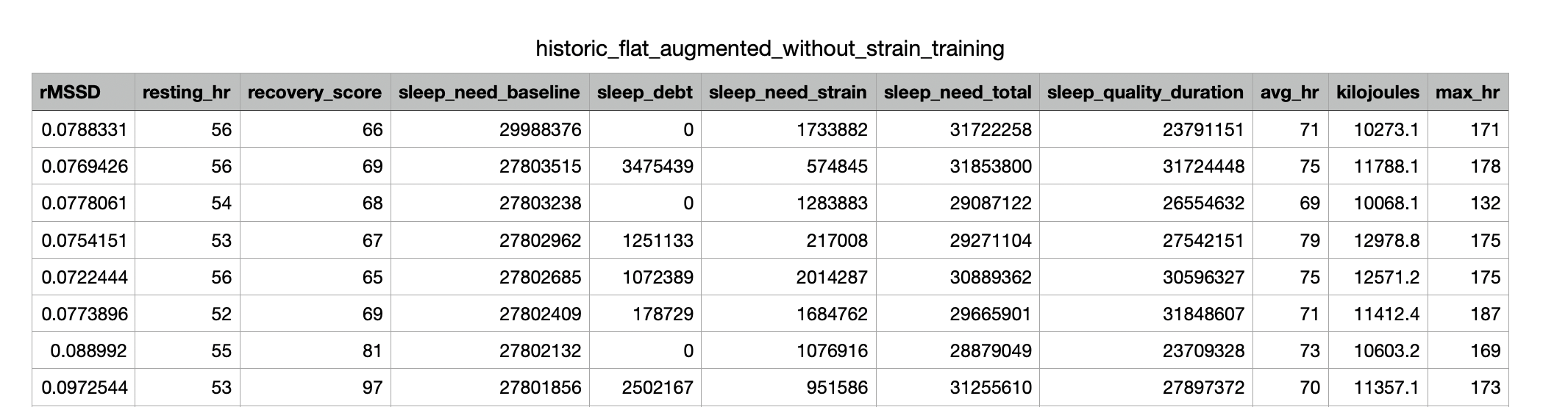

Our use case: Predict caloric intake based on sleep, recovery and heart rate data

Model training

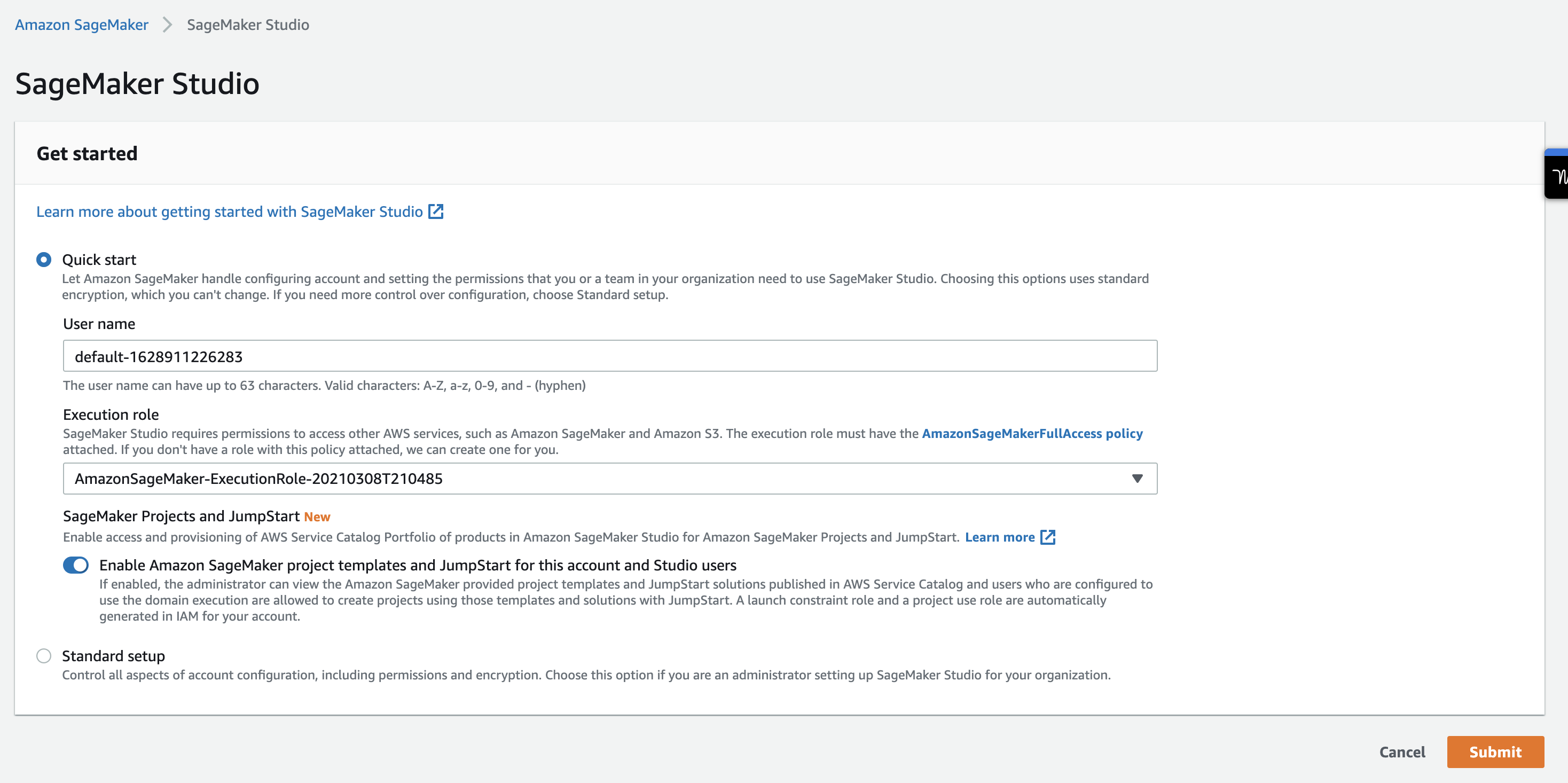

- Create an Amazon Web Services account

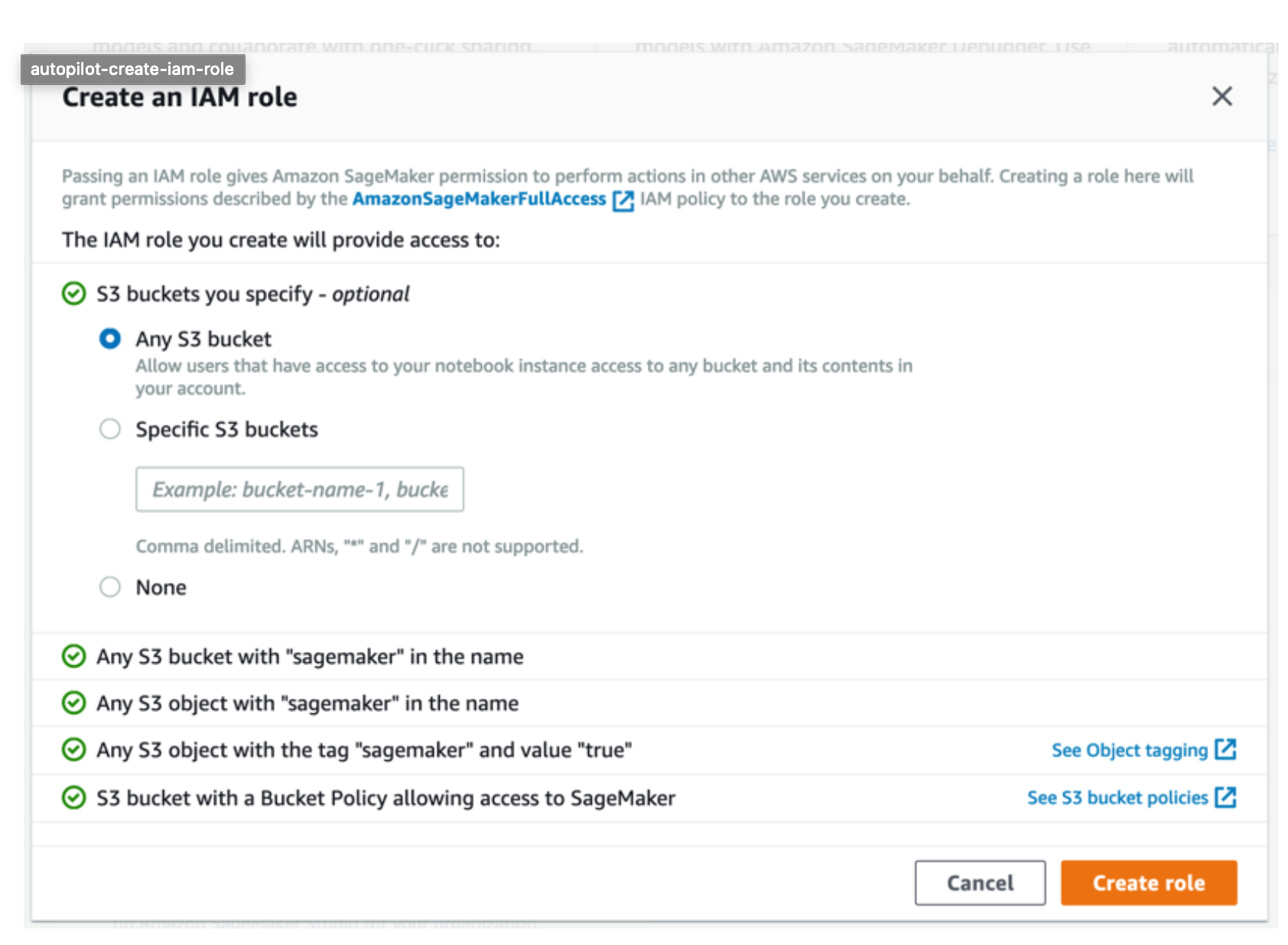

- Set up SageMaker Studio. This is the environment where you create and run Autopilot experiments

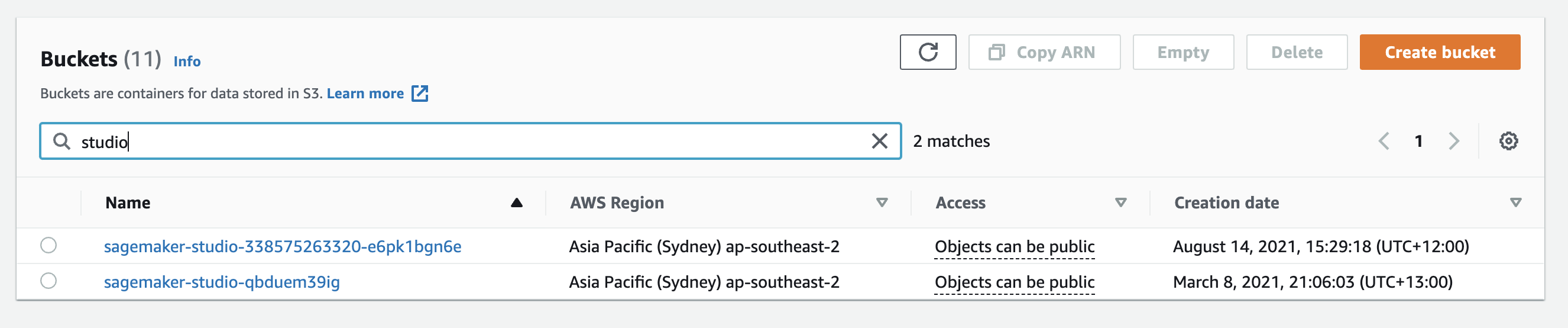

- Upload your labelled data to the S3 bucket your Studio setup created

Autopilot requires your data to be in CSV format, and it should contain at least 500 rows.
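Before uploading, it can be worth checking the file against the requirements above. Here is a minimal sketch using the standard library, assuming the first row of the CSV is a header. The column names (`sleep_hours`, `recovery_score`, `resting_heart_rate`, `calories`) are hypothetical names for our use case, not something Autopilot prescribes.

```python
import csv
import io

MIN_ROWS = 500  # minimum row count quoted above


def check_training_csv(csv_text, target_column, min_rows=MIN_ROWS):
    """Return a list of problems found; an empty list means the checks passed."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, None)
    if header is None:
        return ["file is empty"]
    problems = []
    if target_column not in header:
        problems.append(f"target column {target_column!r} not in header")
    row_count = sum(1 for row in reader if row)  # skip blank lines
    if row_count < min_rows:
        problems.append(f"only {row_count} data rows, need at least {min_rows}")
    return problems


# Hypothetical sample with too few rows
sample = "sleep_hours,recovery_score,resting_heart_rate,calories\n7.5,82,54,2300\n"
print(check_training_csv(sample, "calories"))
```

For a real file you would read its contents first, for example with `open(path, newline="").read()`, and pass the text in.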

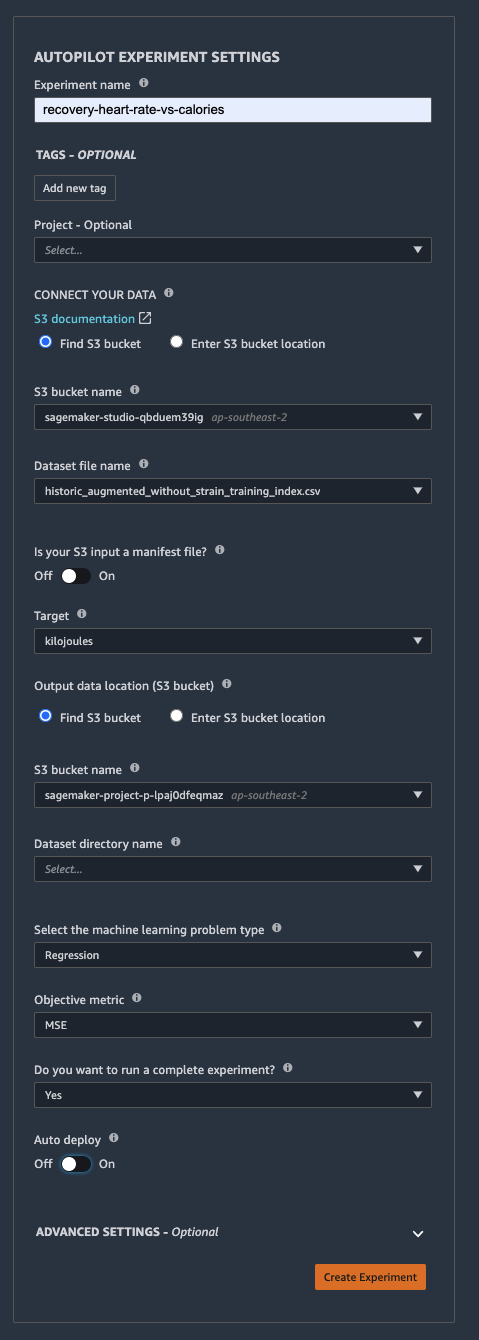

- Launch and configure the experiment

Experiment options

Experiment name: Should be unique within your account in an AWS Region

S3 bucket name: The bucket you uploaded your source data to

- SageMaker must have read permissions on this bucket

Dataset file name: Name of your source data file

Target: The column you want to predict

Output data location: Where SageMaker stores trial artifacts

- SageMaker must have write permissions on this location

Machine learning problem type: Determines the data preprocessing and algorithm selection Autopilot uses

- Auto: Autopilot infers the problem type automatically

- Regression: Predicting continuous numeric values

- Binary classification: Classifying into two categories, e.g. Cat or Not

- Multiclass classification: Classifying into three or more categories, e.g. Cat, Dog or Neither
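The same options can also be expressed in code rather than through the Studio form. The sketch below builds the request dictionary that boto3's `create_auto_ml_job` call accepts. The helper name, bucket, file and role ARN are all placeholders I made up. Leaving `problem_type` as `None` corresponds to the Auto option, and to my understanding an explicit problem type has to be paired with an objective metric, so the helper enforces that.

```python
def build_autopilot_request(job_name, input_s3_uri, target_column,
                            output_s3_uri, role_arn,
                            problem_type=None, objective_metric=None):
    """Build keyword arguments for the SageMaker create_auto_ml_job call."""
    request = {
        "AutoMLJobName": job_name,  # experiment name
        "InputDataConfig": [{
            "DataSource": {
                "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3_uri}
            },
            "TargetAttributeName": target_column,  # column to predict
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "RoleArn": role_arn,  # role with read/write access to the bucket
    }
    if problem_type is not None:
        if objective_metric is None:
            raise ValueError("an explicit problem type also needs an objective metric")
        request["ProblemType"] = problem_type
        request["AutoMLJobObjective"] = {"MetricName": objective_metric}
    return request


# All values below are hypothetical placeholders.
request = build_autopilot_request(
    job_name="calorie-prediction",
    input_s3_uri="s3://my-studio-bucket/data/health.csv",
    target_column="calories",
    output_s3_uri="s3://my-studio-bucket/autopilot-output/",
    role_arn="arn:aws:iam::123456789012:role/MySageMakerRole",
    problem_type="Regression",
    objective_metric="MSE",
)
print(request)
```

With boto3 installed and credentials configured, the dictionary could then be passed along as `boto3.client("sagemaker").create_auto_ml_job(**request)`.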

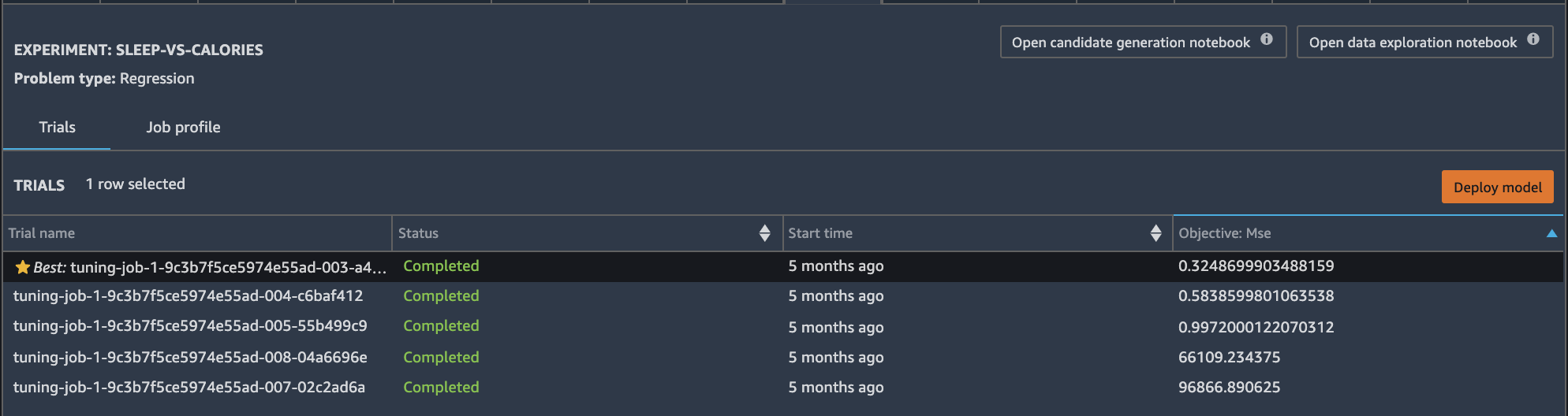

- Run your experiment and view the artefacts generated

Once your experiment is completed you will get a list of candidate models with your best model highlighted.

You can look under the hood and see the data exploration and candidate generation notebooks Autopilot used to create your models.

We won't need to go into these, but this is where data scientists can tweak and modify the approaches created and generate their own models.
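If you would rather fetch the best model from code than from the Studio UI, a polling loop along these lines should work. It is a sketch: `client` is assumed to be a `boto3.client("sagemaker")` object, the helper name is mine, and the job name is whatever you called your experiment.

```python
import time


def wait_for_best_candidate(client, job_name, poll_seconds=30, sleep=time.sleep):
    """Poll an Autopilot job until it finishes, then return its best candidate."""
    while True:
        job = client.describe_auto_ml_job(AutoMLJobName=job_name)
        status = job["AutoMLJobStatus"]
        if status == "Completed":
            return job["BestCandidate"]
        if status in ("Failed", "Stopped"):
            raise RuntimeError(f"job {job_name} ended with status {status}")
        sleep(poll_seconds)  # still running, check again later


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   best = wait_for_best_candidate(boto3.client("sagemaker"), "calorie-prediction")
#   print(best["CandidateName"], best["FinalAutoMLJobObjectiveMetric"])
```

The client is passed in as a parameter so the function can be exercised with a stub before pointing it at a real account.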

Model Deployment

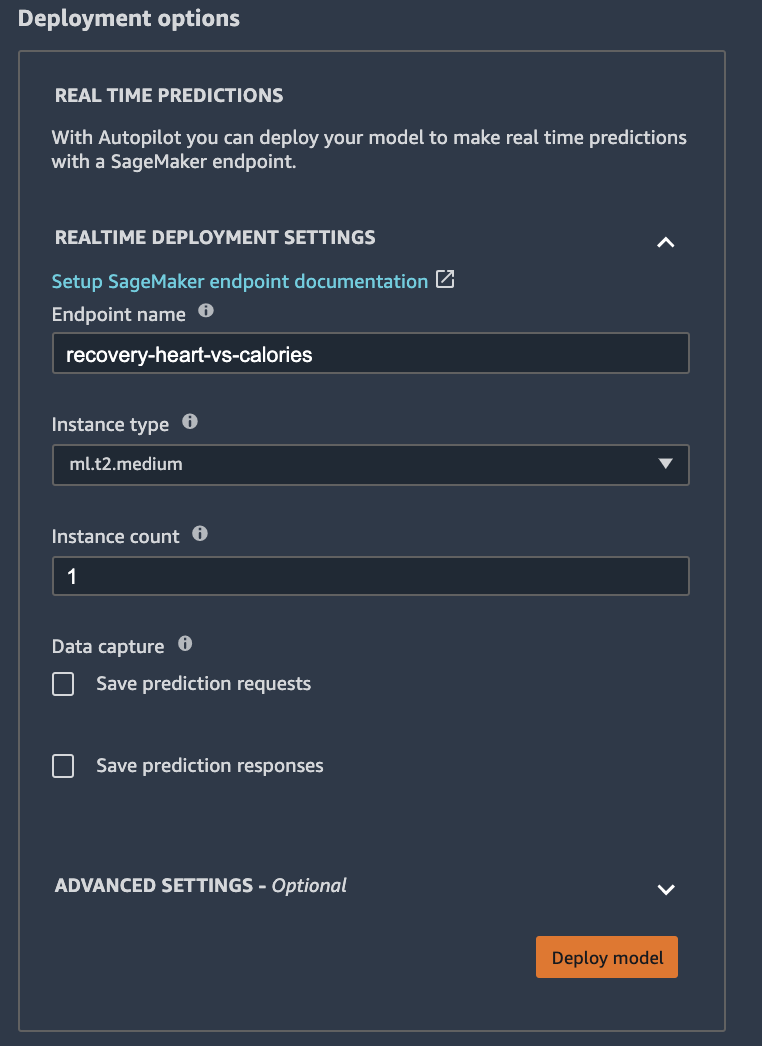

There are two primary modes of model deployment within the SageMaker service: Inference Endpoints and Batch Transform jobs.

Inference Endpoint

- Real time predictions

- Model deployed to a virtual server

- Automatically handles concurrent requests
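Deploying a candidate to an inference endpoint from code takes three calls on the SageMaker client: create a model, create an endpoint configuration, then create the endpoint. The sketch below assumes `client` is a `boto3.client("sagemaker")` object and `candidate` is the best candidate returned by an Autopilot job. The helper name, the shared resource name, and the `ml.m5.large` instance type are my own choices for illustration.

```python
def deploy_candidate(client, candidate, name, role_arn,
                     instance_type="ml.m5.large"):
    """Create a model, an endpoint configuration and an endpoint for a candidate."""
    client.create_model(
        ModelName=name,
        Containers=candidate["InferenceContainers"],
        ExecutionRoleArn=role_arn,
    )
    client.create_endpoint_config(
        EndpointConfigName=name,
        ProductionVariants=[{
            "VariantName": "AllTraffic",
            "ModelName": name,
            "InstanceType": instance_type,  # the virtual server hosting the model
            "InitialInstanceCount": 1,
        }],
    )
    client.create_endpoint(EndpointName=name, EndpointConfigName=name)
    return name


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   deploy_candidate(boto3.client("sagemaker"), best_candidate,
#                    "calorie-endpoint", "arn:aws:iam::123456789012:role/MySageMakerRole")
```

Keep in mind that an endpoint keeps running, and billing, until you delete it.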

Batch Transform Jobs

Use a batch transform job when you:

- Want predictions for an entire dataset

- Don't need a persistent endpoint that applications (for example, web or mobile apps) can call to get predictions

- Don't need the sub-second latency that SageMaker hosted endpoints provide
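For comparison with the endpoint route, here is a sketch of the request a batch transform job needs. It only builds the dictionary for boto3's `create_transform_job` call; the helper name, S3 locations, model name and instance type are placeholders, and the model is assumed to have been created in SageMaker already.

```python
def build_transform_request(job_name, model_name, input_s3_uri, output_s3_uri,
                            instance_type="ml.m5.large"):
    """Build keyword arguments for the SageMaker create_transform_job call."""
    return {
        "TransformJobName": job_name,
        "ModelName": model_name,
        "TransformInput": {
            "DataSource": {
                "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": input_s3_uri}
            },
            "ContentType": "text/csv",
            "SplitType": "Line",  # treat each CSV line as one record
        },
        "TransformOutput": {"S3OutputPath": output_s3_uri},
        "TransformResources": {"InstanceType": instance_type, "InstanceCount": 1},
    }


# Hypothetical placeholder values
request = build_transform_request(
    job_name="calorie-batch-predictions",
    model_name="calorie-model",
    input_s3_uri="s3://my-studio-bucket/batch/input.csv",
    output_s3_uri="s3://my-studio-bucket/batch/output/",
)
print(request)
```

The instances are only provisioned for the duration of the job, which is why this mode suits one-off scoring of a whole dataset.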

Prediction

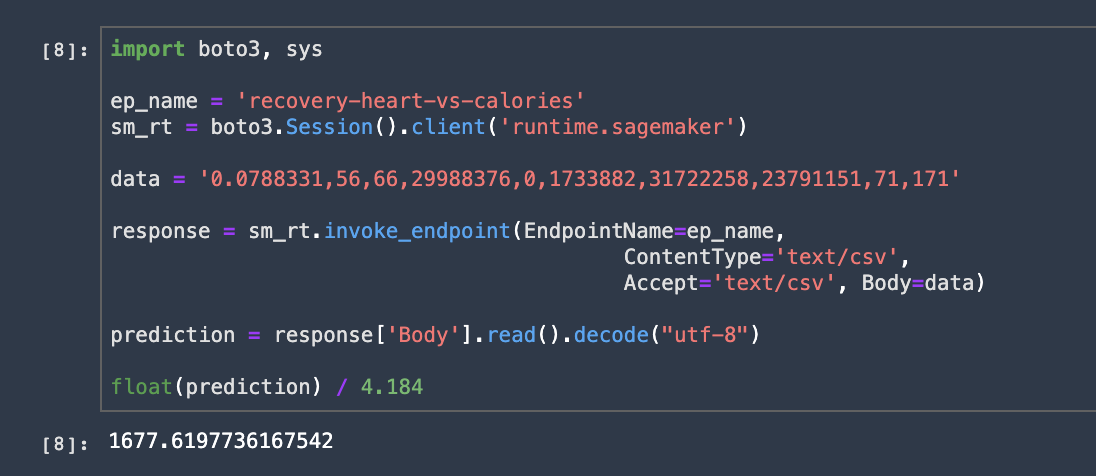

Invoking our endpoint from a SageMaker Studio notebook, we can see how we might interact with our API for real-time inference! Cool, huh?
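In text form, a call like the one in the screenshot might look roughly like this. It is a sketch rather than the exact notebook code: `runtime_client` is assumed to be a `boto3.client("sagemaker-runtime")` object, the endpoint name is a placeholder, and the feature values must be in the same column order as the training data with the target column left out.

```python
def predict(runtime_client, endpoint_name, feature_values):
    """Send one row of features as CSV and return the raw prediction text."""
    payload = ",".join(str(value) for value in feature_values)
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=payload,
    )
    # The response body is a stream of bytes holding the model's answer.
    return response["Body"].read().decode("utf-8").strip()


# Usage, assuming boto3 is installed and the endpoint exists:
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   print(predict(runtime, "calorie-endpoint", [7.5, 82, 54]))
```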

And there you have it: your very first SageMaker machine learning model.